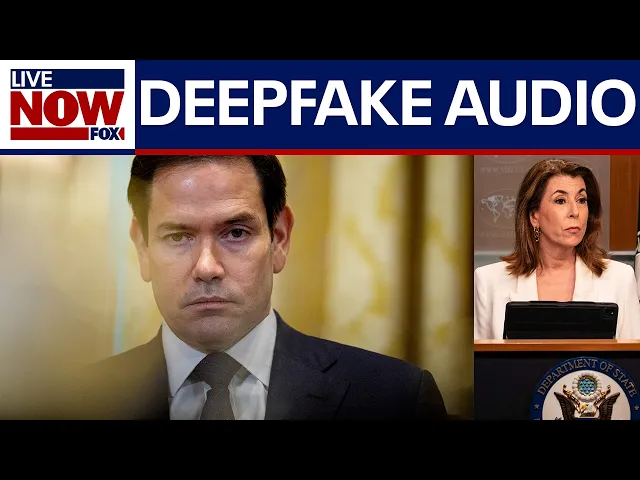

State Department now investigating Sec. Marco Rubio AI impersonator

Deepfake threats demand serious security overhaul

The digital landscape is becoming increasingly treacherous for political figures and businesses alike as AI-generated deepfakes grow more sophisticated by the day. A recent incident involving a deepfake of Secretary of State Marco Rubio demonstrates just how vulnerable our communication channels have become to AI-powered impersonation attacks, forcing us to reconsider our approach to digital identity verification.

Key Points:

- The State Department has launched an investigation into a sophisticated AI impersonation of Secretary Marco Rubio, which fooled multiple foreign diplomats into believing they were communicating with the actual Secretary

- Attackers created convincing video calls in which an AI replica of Rubio interacted with foreign officials in real time, displaying nuanced conversational abilities and visual fidelity

- This incident represents a significant escalation in deepfake technology from previous static images or pre-recorded videos to interactive, real-time impersonation

- The FBI and other intelligence agencies are investigating the origins of the attack, with suspicion falling on nation-state actors rather than criminal organizations

The New Reality of AI Impersonation

The most alarming aspect of this case isn't just that it happened, but how effectively it worked. This wasn't a crude attempt at impersonation but rather a technically sophisticated operation that managed to fool experienced diplomats who regularly interact with Secretary Rubio. The AI impersonator maintained convincing conversation flow, exhibited appropriate diplomatic knowledge, and even replicated Rubio's mannerisms and speech patterns with uncanny accuracy.

This represents a watershed moment in digital security. We've moved beyond the era where deepfakes were easily identifiable by technical glitches or contextual inconsistencies. Today's AI impersonation tools have crossed a threshold where even trained professionals can be deceived in real-time interactions—a capability that fundamentally undermines our traditional trust mechanisms in digital communication.

"We're entering an era where seeing and hearing can no longer be believing," noted one cybersecurity expert familiar with the case. "When foreign officials who regularly interact with Secretary Rubio can't distinguish between him and an AI replica, we've entered uncharted territory for national security."

The Business Implications Are Enormous

While this attack targeted a high-profile political figure, the same technology poses equally significant threats to businesses.