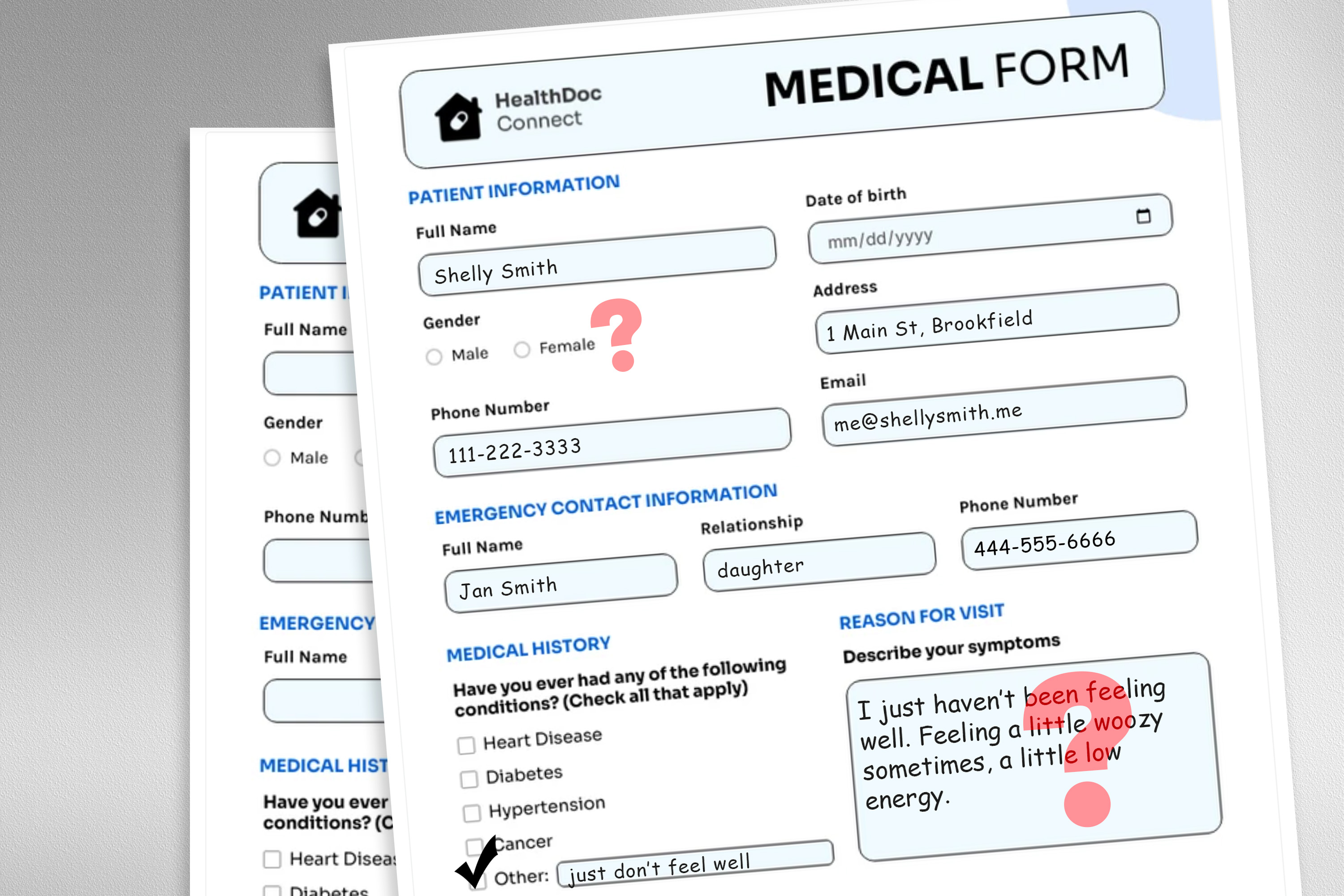

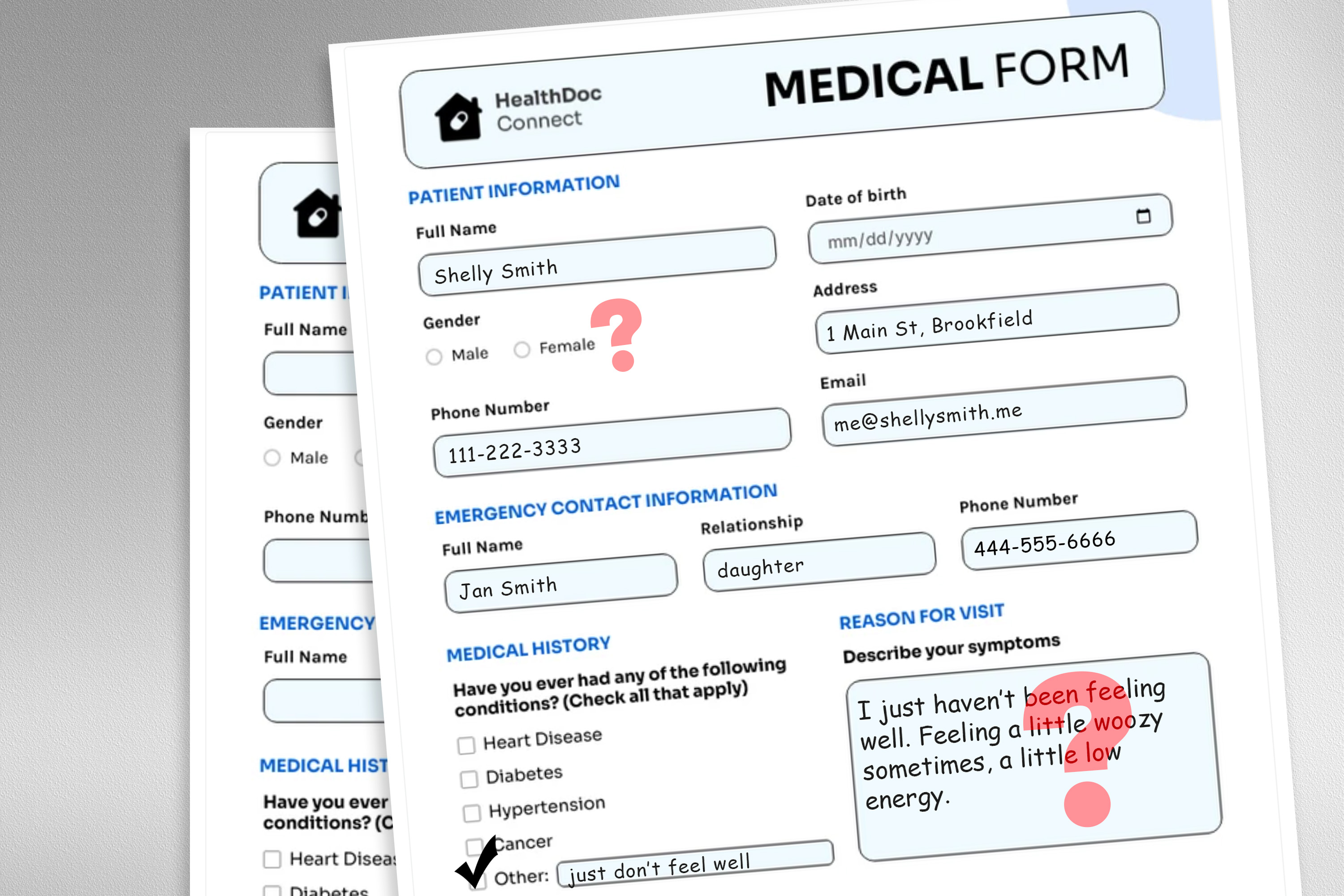

An MIT study finds non-clinical information in patient messages, like typos, extra whitespace, or colorful language, can reduce the accuracy of a large language model deployed to make treatment recommendations. The LLMs were consistently less accurate for female patients, even when all gender markers were removed from the text.

Recent Stories

Jan 19, 2026

App Store apps are exposing data from millions of users

An effort led by security research lab CovertLabs is actively uncovering troves of (mostly) AI-related apps that leak and expose user data.

Jan 19, 2026

Stop ignoring AI risks in finance, MPs tell BoE and FCA

Treasury committee urges regulators and Treasury to take more ‘proactive’ approach

Jan 19, 2026

OpenAI CFO Friar: 2026 is year for ‘practical adoption’ of AI

OpenAI CFO Sarah Friar said the company is focused on "practical adoption" in 2026, especially in health, science, and enterprise.